
I. Scheduling Pods onto Specific Nodes

1. Assigning a Node with nodeSelector Labels

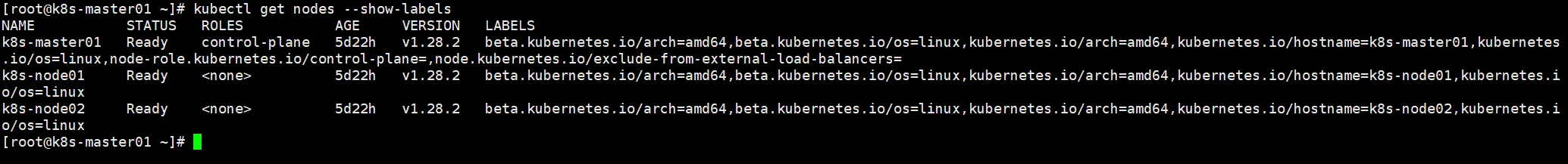

First, list the labels on the nodes in the K8s cluster:

```bash
kubectl get nodes --show-labels
```

Then add a label to each target node:

```bash
kubectl label nodes k8s-node01 name=songxuan01
kubectl label nodes k8s-node02 name=songxuan02
```

Next, put the matching label under `nodeSelector` in the YAML file. The Pod will then be scheduled onto the node that carries that label:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx01
  labels:
    name: songxuan01
spec:
  containers:
  - name: nginx01
    image: nginx:1.14.2
    ports:
    - containerPort: 80
    imagePullPolicy: IfNotPresent
  nodeSelector:
    name: songxuan01
  restartPolicy: Always
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx02
  labels:
    name: songxuan02
spec:
  containers:
  - name: nginx02
    image: nginx:1.14.2
    ports:
    - containerPort: 80
    imagePullPolicy: IfNotPresent
  nodeSelector:
    name: songxuan02
  restartPolicy: Always
```

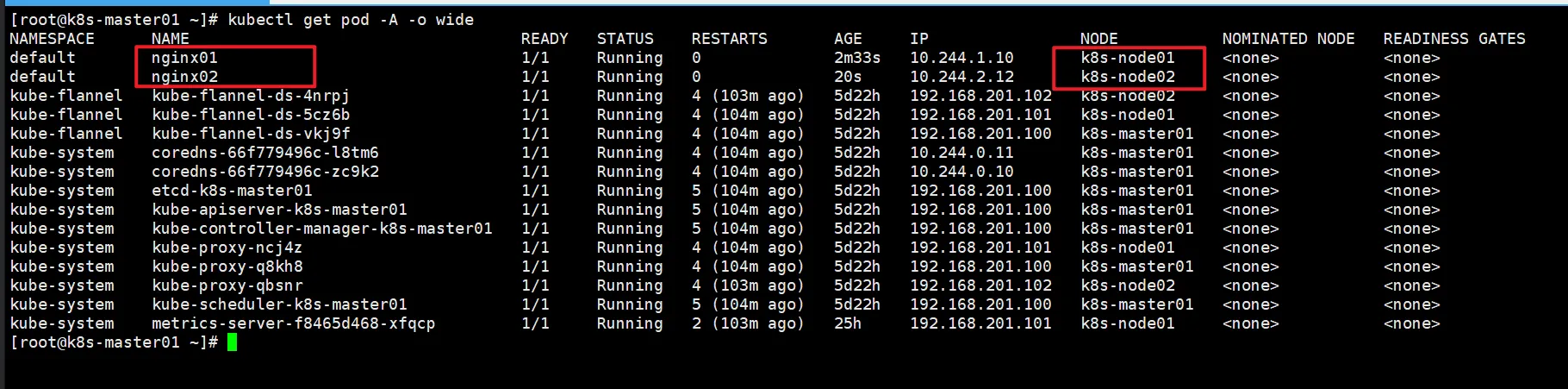

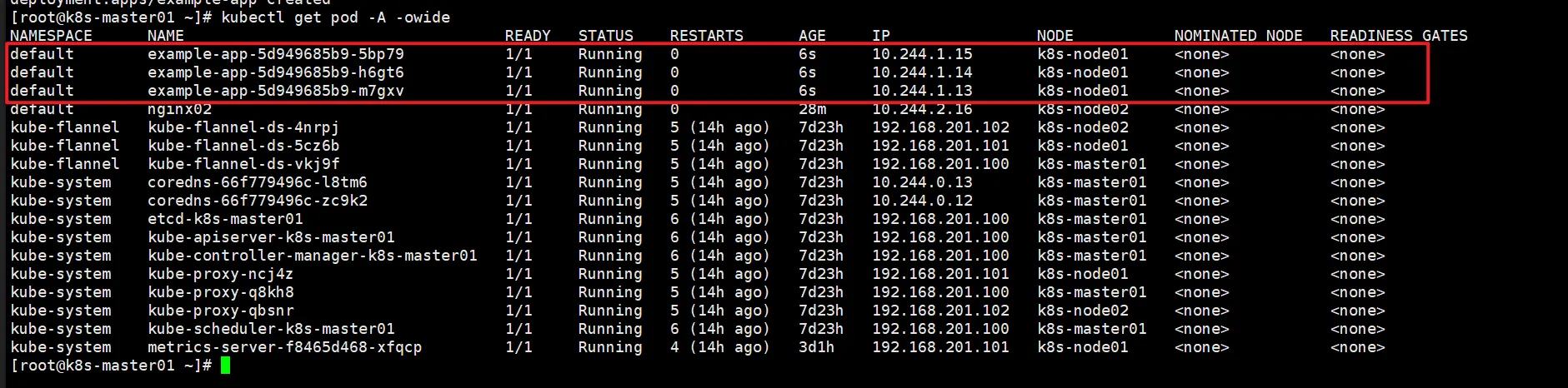

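Checking the Pods (for example with `kubectl get pods -o wide`) shows that each of them has been scheduled onto its corresponding node.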

Note

If no node carries the label specified in the YAML file, the Pod stays in the Pending state.

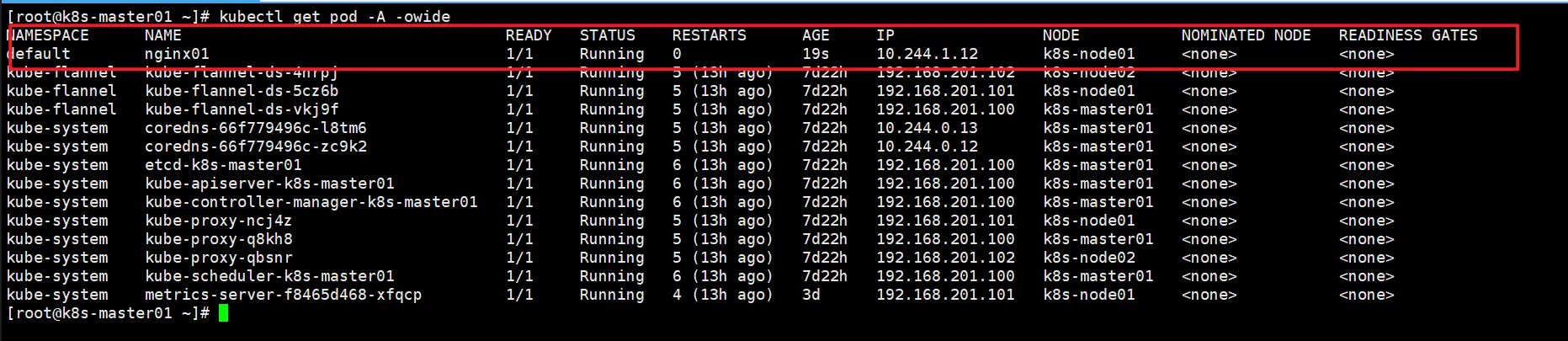
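The nodeSelector rule above (the Pod may only go to a node carrying every listed label, and stays Pending otherwise) can be expressed as a small model. The sketch below is a simplified illustration, not kube-scheduler code. The helper names `fits_node_selector` and `eligible_nodes` are hypothetical, and the node labels mirror the `kubectl label` commands above.

```python
# Simplified model of nodeSelector matching (not kube-scheduler source code).


def fits_node_selector(node_labels: dict[str, str], node_selector: dict[str, str]) -> bool:
    """A node fits only if it carries every key/value pair listed in nodeSelector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: dict[str, dict[str, str]], node_selector: dict[str, str]) -> list[str]:
    """Names of the nodes the Pod may run on; an empty list means the Pod stays Pending."""
    return [name for name, labels in nodes.items() if fits_node_selector(labels, node_selector)]


# Labels set by the kubectl label commands above (built-in node labels omitted).
nodes = {
    "k8s-node01": {"name": "songxuan01"},
    "k8s-node02": {"name": "songxuan02"},
}

print(eligible_nodes(nodes, {"name": "songxuan01"}))  # ['k8s-node01']
print(eligible_nodes(nodes, {"name": "songxuan03"}))  # [] -> Pending
```

Extra labels on a node do not prevent a match. Only the keys listed in nodeSelector are checked.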

2. Pinning a Pod to a Node with nodeName

Set `nodeName: k8s-node02` to place the Pod on that specific node:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx03
  labels:
    name: songxuan03
spec:
  containers:
  - name: nginx03
    image: nginx:1.14.2
    ports:
    - containerPort: 80
    imagePullPolicy: IfNotPresent
  nodeName: k8s-node02 # name of the target node
  restartPolicy: Always
```

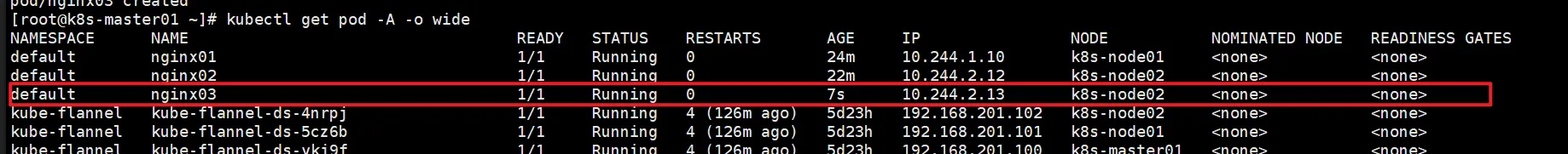

After the Pod starts, it is running on the node I specified.

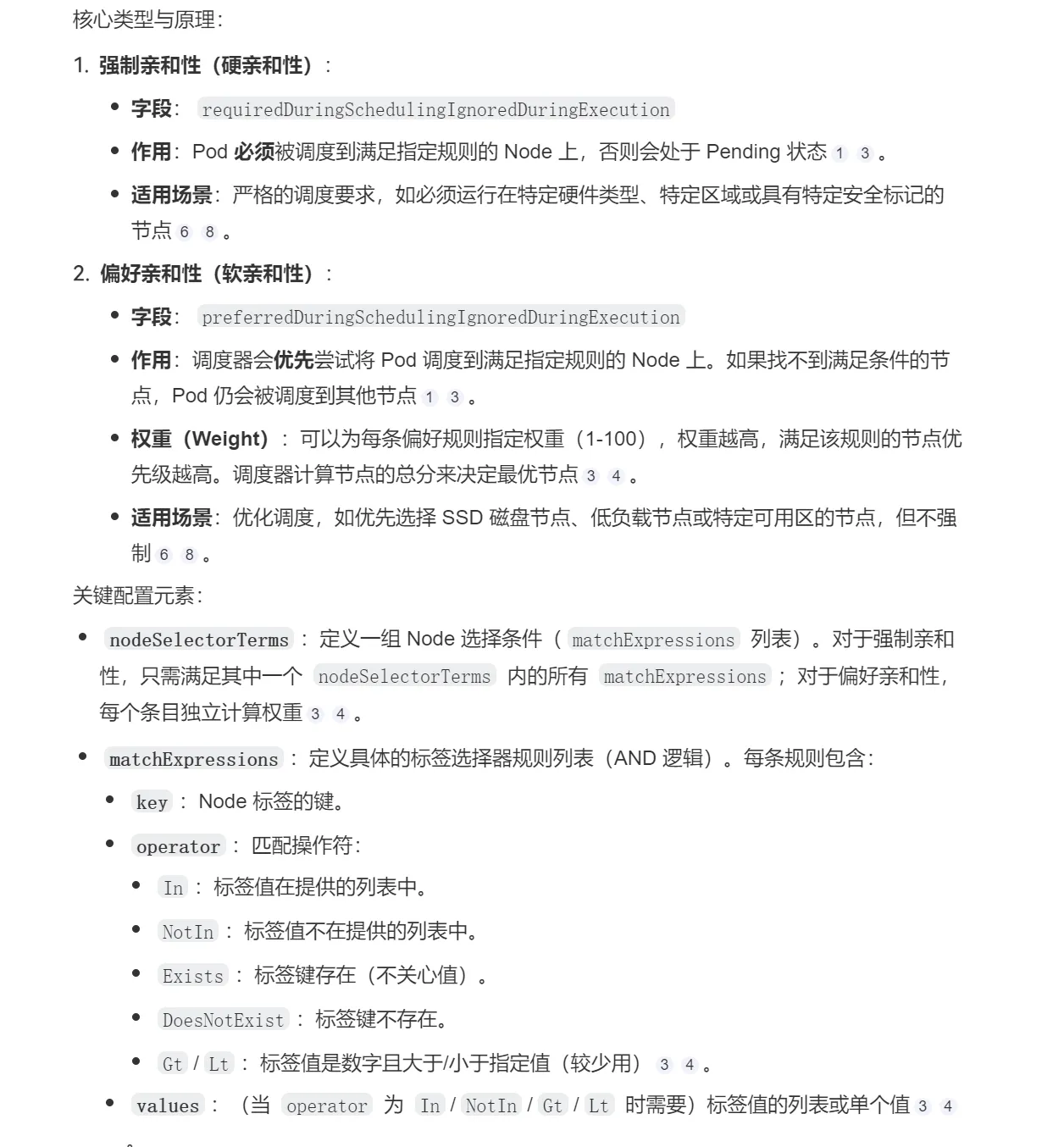

II. Affinity and Anti-Affinity

1. Hard Affinity: requiredDuringSchedulingIgnoredDuringExecution

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx01
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution: # hard affinity requirement
        nodeSelectorTerms:
        - matchExpressions:
          - key: name # label key "name"
            operator: In # the label value must be in the list below
            values:
            - songxuan01 # value songxuan01
  containers:
  - name: nginx
    image: nginx:1.19
```

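With the hard affinity rule requiredDuringSchedulingIgnoredDuringExecution, the Pod can only be placed on a worker node that has a `name` label whose value is one of the listed values (here `songxuan01`). Otherwise the Pod stays Pending, just as with nodeSelector.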
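The hard-affinity check can be modeled as follows. Entries in `nodeSelectorTerms` are ORed, and the `matchExpressions` inside one term are ANDed. This is a simplified sketch, not kube-scheduler code. It models only the `In`, `NotIn`, `Exists` and `DoesNotExist` operators, and the function names are hypothetical.

```python
# Simplified model of requiredDuringSchedulingIgnoredDuringExecution
# (not kube-scheduler source code; the Gt/Lt operators are not modeled).


def expression_matches(labels: dict[str, str], expr: dict) -> bool:
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":            # label present and its value is one of `values`
        return key in labels and labels[key] in values
    if op == "NotIn":         # label absent, or its value is not in `values`
        return key not in labels or labels[key] not in values
    if op == "Exists":        # label present, any value
        return key in labels
    if op == "DoesNotExist":  # label absent
        return key not in labels
    raise ValueError(f"operator not modeled in this sketch: {op}")


def required_affinity_matches(labels: dict[str, str], node_selector_terms: list[dict]) -> bool:
    # nodeSelectorTerms are ORed; the matchExpressions inside one term are ANDed.
    return any(
        all(expression_matches(labels, expr) for expr in term["matchExpressions"])
        for term in node_selector_terms
    )


# The rule from the nginx01 manifest above.
terms = [{"matchExpressions": [{"key": "name", "operator": "In", "values": ["songxuan01"]}]}]

print(required_affinity_matches({"name": "songxuan01"}, terms))  # True
print(required_affinity_matches({"name": "songxuan02"}, terms))  # False
print(required_affinity_matches({}, terms))                      # False -> Pending if no node matches
```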

2. Soft Affinity: preferredDuringSchedulingIgnoredDuringExecution

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx02
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution: # soft affinity preference
      - preference:
          matchExpressions:
          - key: name
            operator: In
            values:
            - songxuan02
        weight: 90 # weight 90 for nodes whose name label is songxuan02
  containers:
  - name: nginx
    image: nginx:1.19
```

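With the soft affinity rule preferredDuringSchedulingIgnoredDuringExecution, the scheduler favors nodes that satisfy the condition. If no node satisfies it, the Pod is still placed on some other node chosen by the scheduler, instead of staying Pending as it would under hard affinity.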
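Soft affinity works by scoring nodes, not by filtering them: each preferred term a node satisfies adds its `weight` (1-100) to that node's score. The sketch below is a simplified model under stated assumptions. Only the `In` operator is handled, and the real scheduler also normalizes this score and combines it with other scoring plugins. The helper name is hypothetical.

```python
# Simplified model of preferredDuringSchedulingIgnoredDuringExecution scoring.
# Only the In operator is modeled; the real scheduler also normalizes this score
# and combines it with other scoring plugins, which this sketch leaves out.


def preference_score(labels: dict[str, str], preferred_terms: list[dict]) -> int:
    """Sum the weights of the preferred terms whose matchExpressions all hold."""
    score = 0
    for term in preferred_terms:
        exprs = term["preference"]["matchExpressions"]
        if all(e["key"] in labels and labels[e["key"]] in e["values"] for e in exprs):
            score += term["weight"]
    return score


# The rule from the nginx02 manifest above.
preferred = [{
    "weight": 90,
    "preference": {"matchExpressions": [{"key": "name", "operator": "In", "values": ["songxuan02"]}]},
}]

nodes = {"k8s-node01": {"name": "songxuan01"}, "k8s-node02": {"name": "songxuan02"}}
print({name: preference_score(labels, preferred) for name, labels in nodes.items()})
# {'k8s-node01': 0, 'k8s-node02': 90}

# With no songxuan02 node, every node scores 0 but none is filtered out,
# so the Pod is still scheduled instead of staying Pending.
nodes_without_match = {"k8s-node01": {"name": "songxuan01"}}
print({name: preference_score(labels, preferred) for name, labels in nodes_without_match.items()})
# {'k8s-node01': 0}
```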

3. Combining Hard and Soft Affinity

```yaml
# Kubernetes API version and resource type
apiVersion: apps/v1 # use the apps/v1 API
kind: Deployment # this resource is a Deployment
# Metadata
metadata:
  name: example-app # the Deployment is named example-app
  labels: # labels on the Deployment
    name: songxuan01 # key/value label identifying this app
# Deployment spec
spec:
  replicas: 3 # run 3 Pod replicas
  # Selector: which Pods this Deployment manages
  selector:
    matchLabels:
      name: songxuan01 # select Pods labeled name=songxuan01
  # Pod template: how each Pod is created
  template:
    metadata:
      labels:
        name: songxuan01 # Pod label; must match the selector
    spec:
      # Container definition
      containers:
      - name: nginx # container name
        image: nginx:1.19 # nginx 1.19 image
        ports:
        - containerPort: 80 # port exposed by the container
      # Affinity scheduling rules
      affinity:
        nodeAffinity: # node affinity rules
          # Must be satisfied (hard requirement)
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: name # label key
                operator: In # the label value must be in the list below
                values:
                - songxuan01 # must run on a node labeled name=songxuan01
          # Preferred if possible (soft preference)
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80 # weight 80 (relative)
            preference:
              matchExpressions:
              - key: name # label key
                operator: In
                values:
                - songxuan02 # prefer nodes labeled name=songxuan02
```

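requiredDuringScheduling (hard requirement) takes precedence over preferredDuringScheduling (soft preference). The hard rule first filters out every node that does not satisfy it, and the soft rule only ranks the nodes that remain. If no node satisfies the hard rule, the Pod stays Pending even though a soft rule is present. In this example a node's `name` label cannot be both songxuan01 and songxuan02, so the preference never changes the outcome: the replicas can only land on nodes labeled name=songxuan01.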
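The two-phase behavior (the hard rule filters, the soft rule ranks the survivors) can be sketched like this. It is a simplified model, not the scheduler's implementation. It assumes a single nodeSelectorTerm and only the `In` operator, and `matches` and `pick_node` are hypothetical names.

```python
# Simplified two-phase model: the hard rule filters, the soft rule ranks the survivors.
# Only one nodeSelectorTerm and the In operator are modeled; names are hypothetical.
from typing import Optional


def matches(labels: dict[str, str], exprs: list[dict]) -> bool:
    """True if every matchExpression (In operator) holds for the node labels."""
    return all(e["key"] in labels and labels[e["key"]] in e["values"] for e in exprs)


def pick_node(nodes: dict[str, dict[str, str]], required: list[dict],
              preferred: list[tuple[int, list[dict]]]) -> Optional[str]:
    # Phase 1 (filter): drop every node that fails the hard rule.
    candidates = [name for name, labels in nodes.items() if matches(labels, required)]
    if not candidates:
        return None  # no feasible node: Pending; soft rules cannot rescue the Pod

    # Phase 2 (score): the soft rule only ranks the nodes that passed the filter.
    def score(name: str) -> int:
        return sum(weight for weight, exprs in preferred if matches(nodes[name], exprs))

    return max(candidates, key=score)


# The rules from the example-app Deployment above.
required = [{"key": "name", "operator": "In", "values": ["songxuan01"]}]
preferred = [(80, [{"key": "name", "operator": "In", "values": ["songxuan02"]}])]
nodes = {"k8s-node01": {"name": "songxuan01"}, "k8s-node02": {"name": "songxuan02"}}

print(pick_node(nodes, required, preferred))                                   # k8s-node01
print(pick_node({"k8s-node02": {"name": "songxuan02"}}, required, preferred))  # None
```

The second call shows that a node matching only the soft rule is never chosen when it fails the hard rule.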

4. topologyKey in Pod Affinity and Anti-Affinity

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-server
  labels:
    app: web-server
    tier: frontend
spec:
  affinity:
    # Hard anti-affinity: never place this Pod in a topology domain
    # that already runs a Pod labeled name=songxuan01
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: name
            operator: In
            values:
            - songxuan01
        topologyKey: "name" # domain = nodes sharing the same value of the node label "name"
    # Soft affinity: prefer, but do not require, a domain that runs a Pod labeled name=songxuan02
    podAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100 # weight (1-100)
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: name
              operator: In
              values:
              - songxuan02
          topologyKey: "name"
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
```
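`topologyKey` names a node label. Nodes that share the same value of that label form one topology domain, and Pod affinity or anti-affinity is evaluated per domain rather than per node. The `labelSelector` matches the labels of Pods that are already running, not node labels. Because every node in this article has a distinct `name` value, each domain here is a single node. The sketch below is a simplified model under these assumptions. It handles only `In`-style selectors, ignores the symmetry checks the real scheduler performs, and includes a hypothetical `k8s-node03`.

```python
# Simplified model of how topologyKey groups nodes into domains for inter-Pod
# (anti-)affinity. Only In-style selectors are modeled; k8s-node03 is hypothetical.


def domain_of(node_labels: dict[str, str], topology_key: str):
    """The topology domain is the value of the node's topologyKey label (None if missing)."""
    return node_labels.get(topology_key)


def domains_running(nodes, pods_by_node, selector: dict[str, list[str]], topology_key: str) -> set:
    """Domains that already run at least one Pod whose labels satisfy `selector`."""
    found = set()
    for node_name, pod_label_list in pods_by_node.items():
        domain = domain_of(nodes[node_name], topology_key)
        if domain is None:
            continue  # nodes without the topologyKey label belong to no domain
        if any(all(pod.get(k) in vals for k, vals in selector.items()) for pod in pod_label_list):
            found.add(domain)
    return found


nodes = {
    "k8s-node01": {"name": "songxuan01"},
    "k8s-node02": {"name": "songxuan02"},
    "k8s-node03": {"name": "songxuan03"},  # hypothetical extra node
}
# Labels of Pods already running, e.g. nginx01 and nginx02 created earlier.
pods_by_node = {
    "k8s-node01": [{"name": "songxuan01"}],
    "k8s-node02": [{"name": "songxuan02"}],
    "k8s-node03": [],
}

# podAntiAffinity (required): exclude domains running name=songxuan01 Pods.
banned = domains_running(nodes, pods_by_node, {"name": ["songxuan01"]}, "name")
# podAffinity (preferred, weight 100): favor domains running name=songxuan02 Pods.
favored = domains_running(nodes, pods_by_node, {"name": ["songxuan02"]}, "name")

scores = {
    node: (100 if domain_of(labels, "name") in favored else 0)
    for node, labels in nodes.items()
    if domain_of(labels, "name") not in banned
}
print(scores)  # {'k8s-node02': 100, 'k8s-node03': 0}
```

With a shared label such as a zone as the topologyKey, one matching Pod would ban or favor every node in its zone at once.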

III. Cordoning, Tainting and Draining Nodes

1. Cordoning a Node

Marks the node as SchedulingDisabled so that no new Pods are scheduled onto it. Pods already running on the node are not affected.

```bash
# Disable scheduling
kubectl cordon <node-name>
# Re-enable scheduling
kubectl uncordon <node-name>
```
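The cordon semantics (new Pods are blocked, existing Pods keep running) can be illustrated with a toy model. This is a sketch under simplifying assumptions, not kubectl or scheduler code. The `Node` class, the helper functions and the Pod name `nginx04` are hypothetical, and "first schedulable node" stands in for the scheduler's real scoring.

```python
# Simplified model of cordon/uncordon (not kubectl or scheduler source code).
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    unschedulable: bool = False  # the node field that `kubectl cordon` sets (spec.unschedulable)
    pods: list[str] = field(default_factory=list)


def cordon(node: Node) -> None:
    node.unschedulable = True


def uncordon(node: Node) -> None:
    node.unschedulable = False


def schedule_new_pod(nodes: list[Node], pod: str):
    """Place a new Pod on the first schedulable node; None means it stays Pending."""
    for node in nodes:
        if not node.unschedulable:
            node.pods.append(pod)
            return node.name
    return None


node = Node("k8s-node01", pods=["nginx01"])
cordon(node)
print(schedule_new_pod([node], "nginx04"))  # None: new Pods are blocked
print(node.pods)                            # ['nginx01']: the existing Pod keeps running
uncordon(node)
print(schedule_new_pod([node], "nginx04"))  # k8s-node01
```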

2. Tainting a Node

A taint keeps any Pod that lacks a matching toleration from being scheduled onto the node.

```bash
kubectl taint nodes <node-name> key=value:NoSchedule # add a taint that blocks scheduling
kubectl taint nodes <node-name> key:NoSchedule- # remove the taint
```
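Whether a Pod gets past a taint depends on toleration matching. The effects must agree, or the toleration leaves its effect empty. With `Equal`, both key and value must match. With `Exists`, only the key must match, and an empty key tolerates everything. The sketch below is a simplified model of that check at scheduling time, not kube-scheduler code. It treats `NoSchedule` and `NoExecute` taints as blocking and ignores `PreferNoSchedule`, which only affects scoring. The class and function names are hypothetical.

```python
# Simplified model of taint/toleration matching at scheduling time
# (not kube-scheduler source code).
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # with operator "Exists", an empty key tolerates every taint
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty means "any effect"


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key in ("", taint.key)
    return tol.key == taint.key and tol.value == taint.value  # operator "Equal"


def can_schedule(taints: list[Taint], tolerations: list[Toleration]) -> bool:
    """NoSchedule/NoExecute taints block the Pod unless some toleration matches each one;
    PreferNoSchedule only lowers the node's score, so it is ignored here."""
    return all(
        any(tolerates(tol, taint) for tol in tolerations)
        for taint in taints
        if taint.effect in ("NoSchedule", "NoExecute")
    )


taint = Taint("key", "value", "NoSchedule")  # as added by the kubectl taint command above
print(can_schedule([taint], []))                                                   # False
print(can_schedule([taint], [Toleration("key", "Equal", "value", "NoSchedule")]))  # True
print(can_schedule([taint], [Toleration("key", "Exists")]))                        # True
```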

3. Draining a Node

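Evicts the Pods running on the node and marks the node unschedulable at the same time. DaemonSet-managed Pods are skipped when `--ignore-daemonsets` is given.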

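```bash
# --ignore-daemonsets: skip DaemonSet-managed Pods instead of refusing to drain
# --delete-emptydir-data: allow evicting Pods that use emptyDir volumes (that data is lost)
# --force: also delete Pods that are not managed by a controller
kubectl drain <node-name> --ignore-daemonsets --delete-emptydir-data --force
```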
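What each drain flag unlocks can be summarized as a small decision model. The sketch below is a simplification under stated assumptions, not kubectl's implementation. Real `kubectl drain` also cordons the node, reports every blocking Pod at once and skips static (mirror) Pods, and none of that is modeled here. The `Pod` class, `plan_drain` and the Pod names are hypothetical.

```python
# Simplified model of which Pods `kubectl drain` evicts and which flags it needs.
# Not kubectl source: real drain also cordons the node, reports every blocking Pod
# at once and skips static (mirror) Pods, none of which is modeled here.
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    owner_kind: str = ""         # "" = not managed by a controller (a bare Pod)
    uses_emptydir: bool = False  # mounts an emptyDir volume


def plan_drain(pods: list[Pod], ignore_daemonsets: bool = False,
               delete_emptydir_data: bool = False, force: bool = False) -> list[str]:
    """Return the names of Pods that would be evicted, or raise if a flag is missing."""
    evict = []
    for pod in pods:
        if pod.owner_kind == "DaemonSet":
            if not ignore_daemonsets:
                raise RuntimeError(f"{pod.name}: DaemonSet Pod, needs --ignore-daemonsets")
            continue  # skipped, not evicted
        if not pod.owner_kind and not force:
            raise RuntimeError(f"{pod.name}: not managed by a controller, needs --force")
        if pod.uses_emptydir and not delete_emptydir_data:
            raise RuntimeError(f"{pod.name}: uses emptyDir, needs --delete-emptydir-data")
        evict.append(pod.name)
    return evict


pods = [
    Pod("kube-proxy-abcde", owner_kind="DaemonSet"),  # hypothetical Pod names
    Pod("example-app-1", owner_kind="ReplicaSet"),
    Pod("nginx03"),                                   # bare Pod, like the nodeName example
    Pod("cache-0", owner_kind="StatefulSet", uses_emptydir=True),
]
print(plan_drain(pods, ignore_daemonsets=True, delete_emptydir_data=True, force=True))
# ['example-app-1', 'nginx03', 'cache-0']
```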

Author: 松轩 (^U^)


Copyright notice: Unless otherwise stated, all posts on this blog are licensed under BY-NC-SA. Please credit the source when reposting!
