Note: this article was written 943 days ago and last modified 934 days ago, so some of the information may be out of date.


1. Environment and server preparation

Server 1: 192.168.24.128

Server 2: 192.168.24.129

Server 3: 192.168.24.130

2. Sync the hosts file

vi /etc/hosts

Add the following entries on every node:

192.168.24.128 songxuan001
192.168.24.129 songxuan002
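Editing `/etc/hosts` by hand on every node is easy to get wrong, so here is a minimal sketch of a scripted alternative. The helper name `add_host_entry` is hypothetical (it is not part of the original steps); it appends an entry only when the hostname is not already mapped, so it is safe to run more than once.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Look for NAME as a whole field after the address, ignoring comment lines.
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every node):
#   add_host_entry 192.168.24.128 songxuan001
#   add_host_entry 192.168.24.129 songxuan002
```

The optional third argument exists only so the function can be tried against a copy of the file before touching the real one.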

3. Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

4. Disable swap

swapoff -a

vim /etc/fstab # comment out the swap line so swap stays off after a reboot
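The fstab edit can also be done without opening an editor. The following is a sketch under the assumption that swap entries use `swap` as their third (filesystem type) field, which is the usual fstab layout; `comment_out_swap` is a hypothetical helper name, not something from the original steps.

```shell
# Comment out every active swap entry in an fstab-style file.
# Usage: comment_out_swap [FILE]   (FILE defaults to /etc/fstab)
comment_out_swap() {
    file=${1:-/etc/fstab}
    # Keep a backup next to the original before rewriting it.
    cp "$file" "$file.bak" || return 1
    # Prefix '#' to uncommented lines whose third field is "swap";
    # every other line is copied through unchanged.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$file.bak" > "$file"
}

# Example (run as root):
#   swapoff -a && comment_out_swap
#   free -h    # the Swap row should now show 0
```

Lines that are already commented are skipped, so running it twice does not stack `#` characters.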

5. Synchronize time

yum install -y ntpdate

ntpdate -u ntp.aliyun.com

6. Install containerd

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install containerd:

yum install -y containerd.io cri-tools

Configure containerd:

cat > /etc/containerd/config.toml <<EOF
disabled_plugins = ["restart"]
[plugins.linux]
shim_debug = true
[plugins.cri.registry.mirrors."docker.io"]
endpoint = ["https://frz7i079.mirror.aliyuncs.com"]
[plugins.cri]
sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"
EOF

Enable containerd at boot and start it:

systemctl enable containerd && systemctl start containerd && systemctl status containerd

Kernel modules to load at boot for containerd:

cat > /etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF

Kubernetes network settings (sysctl):

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

Load the overlay and br_netfilter modules:

modprobe overlay
modprobe br_netfilter

Apply the settings and confirm that they took effect:

sysctl -p /etc/sysctl.d/k8s.conf
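`sysctl -p` echoes the values it sets, but it does not show whether they are still in effect later. A small sketch of a stricter check follows; `verify_sysctl_file` is a hypothetical helper that compares each `key = value` line in the file with what `sysctl -n` reports. Note that the `net.bridge.*` keys only exist once `br_netfilter` is loaded, so a missing key is reported as a mismatch.

```shell
# Compare each "key = value" line of a sysctl config file with the live
# kernel value. Prints mismatches and returns non-zero if any are found.
# Usage: verify_sysctl_file FILE
verify_sysctl_file() {
    rc=0
    while IFS='=' read -r key want; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        want=$(printf '%s' "$want" | tr -d '[:space:]')
        # Skip blank lines and comments.
        case $key in ''|'#'*) continue ;; esac
        have=$(sysctl -n "$key" 2>/dev/null) || have='<unavailable>'
        if [ "$have" != "$want" ]; then
            echo "MISMATCH $key: want $want, have $have"
            rc=1
        fi
    done < "$1"
    return "$rc"
}

# Example:
#   lsmod | grep -E '^(overlay|br_netfilter)'   # both modules should be listed
#   verify_sysctl_file /etc/sysctl.d/k8s.conf && echo "sysctl settings OK"
```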

7. List the configured repositories

yum repolist

8. Add the Kubernetes repository (x86_64)

cat <<EOF > kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

On ARM (aarch64) machines, use this definition instead:

cat << EOF > kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

On either architecture, move the file into place:

mv kubernetes.repo /etc/yum.repos.d/
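The two repository definitions differ only in the architecture suffix of `baseurl`, so the choice can be derived from `uname -m`. This is a sketch, not part of the original steps: the function names `k8s_repo_suffix` and `k8s_repo_file` are hypothetical, and only the two architectures covered above are handled.

```shell
# Map a machine name (as printed by `uname -m`) to the suffix used in the
# mirror's repository path. Fails for architectures not covered here.
k8s_repo_suffix() {
    case ${1:-$(uname -m)} in
        x86_64)  echo x86_64 ;;
        aarch64) echo aarch64 ;;
        *)       return 1 ;;
    esac
}

# Print the repo definition for the given (or detected) architecture.
k8s_repo_file() {
    suffix=$(k8s_repo_suffix "$@") || {
        echo "unsupported architecture" >&2
        return 1
    }
    cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-$suffix
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Example (run as root):
#   k8s_repo_file > /etc/yum.repos.d/kubernetes.repo
```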

9. Install kubelet, kubeadm, and kubectl

yum install -y kubelet kubeadm kubectl

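Enable kubelet at boot and start it. Until `kubeadm init` or `kubeadm join` has run on the node, kubelet keeps restarting while it waits for its configuration, so a non-running status at this point is expected:

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet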

10. Initialize the cluster (run this on the control-plane node only!)

kubeadm init \
  --apiserver-advertise-address=192.168.24.128 \
  --pod-network-cidr=10.244.0.0/16 \
  --image-repository registry.aliyuncs.com/google_containers

Then set up kubectl access for the current user:

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

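Run the following command on each worker node. The token and hash below come from this cluster's `kubeadm init` output, so substitute the values printed by your own run:

kubeadm join 192.168.24.128:6443 --token 0s0gkh.v21htsqcusd3oqjv \
  --discovery-token-ca-cert-hash sha256:5759b903a4e32bea3410b3e6355ebb667bff4e7141b1c92f8d9e2899eb80a880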

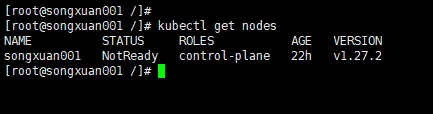
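Bootstrap tokens expire after 24 hours by default, so the join line printed by `kubeadm init` stops working if a node is added later. A sketch of how a fresh join command might be produced is below. `kubeadm token create --print-join-command` is a real kubeadm subcommand; the wrapper names `print_join_command` and `is_bootstrap_token` are hypothetical helpers added for illustration.

```shell
# kubeadm bootstrap tokens have the form "<6 chars>.<16 chars>", using
# lowercase letters and digits only. Cheap sanity check before pasting one.
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Run on the control-plane node: create a fresh token and print the full
# `kubeadm join ...` line to execute on the worker nodes.
print_join_command() {
    kubeadm token create --print-join-command
}

# Example:
#   is_bootstrap_token 0s0gkh.v21htsqcusd3oqjv && echo "token format looks valid"
#   print_join_command
```

The format check only catches copy-paste damage; whether a token is still valid is decided by the API server.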

11. Verify that the nodes joined

kubectl get nodes

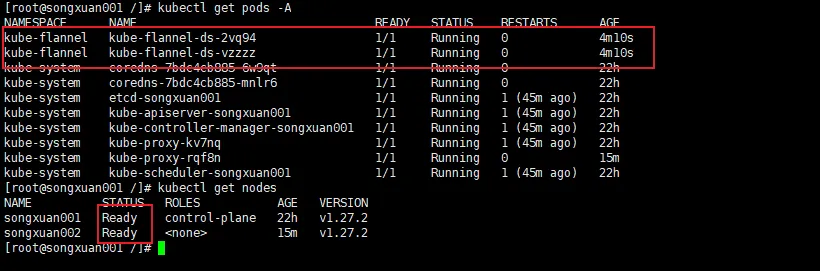
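Nodes are listed as `NotReady` until a pod network plugin is installed, so that status is expected at this stage. As a rough sketch, the output of `kubectl get nodes` can be filtered to show only the nodes that still need attention; `not_ready_nodes` is a hypothetical helper that reads the `--no-headers` output on stdin.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is not exactly "Ready".
# (A cordoned node shows "Ready,SchedulingDisabled" and is listed too.)
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example on the control-plane node:
#   kubectl get nodes --no-headers | not_ready_nodes
# To block until every node reports Ready instead:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```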

12. Configure the network plugin

Create a YAML file:

vi kube-flannel.yml

Paste the following content into it.

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.20.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.20.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

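Then run the following command on the control-plane node:

kubectl apply -f kube-flannel.yml

Once the flannel images have been pulled and the pods are running, the pod network is ready.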
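The `Network` value in the manifest's `net-conf.json` has to match the `--pod-network-cidr` passed to `kubeadm init` (both are `10.244.0.0/16` here). A minimal sketch of a pre-flight check is below; `flannel_network` and `check_pod_cidr` are hypothetical helper names, and the extraction assumes the `"Network": "..."` pair sits on one line, as it does in this manifest.

```shell
# Print the "Network" CIDR found in a flannel manifest's net-conf.json section.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if the manifest's Network equals the CIDR given to kubeadm init.
# Usage: check_pod_cidr MANIFEST CIDR
check_pod_cidr() {
    manifest=$1
    cidr=$2
    found=$(flannel_network "$manifest")
    if [ "$found" = "$cidr" ]; then
        echo "OK: flannel Network matches $cidr"
    else
        echo "MISMATCH: manifest has '$found', kubeadm init used '$cidr'" >&2
        return 1
    fi
}

# Example, before applying the manifest:
#   check_pod_cidr kube-flannel.yml 10.244.0.0/16
# After applying, watch the DaemonSet come up:
#   kubectl -n kube-flannel rollout status daemonset/kube-flannel-ds
```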

Author: 松轩(^U^)


Copyright notice: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting!
